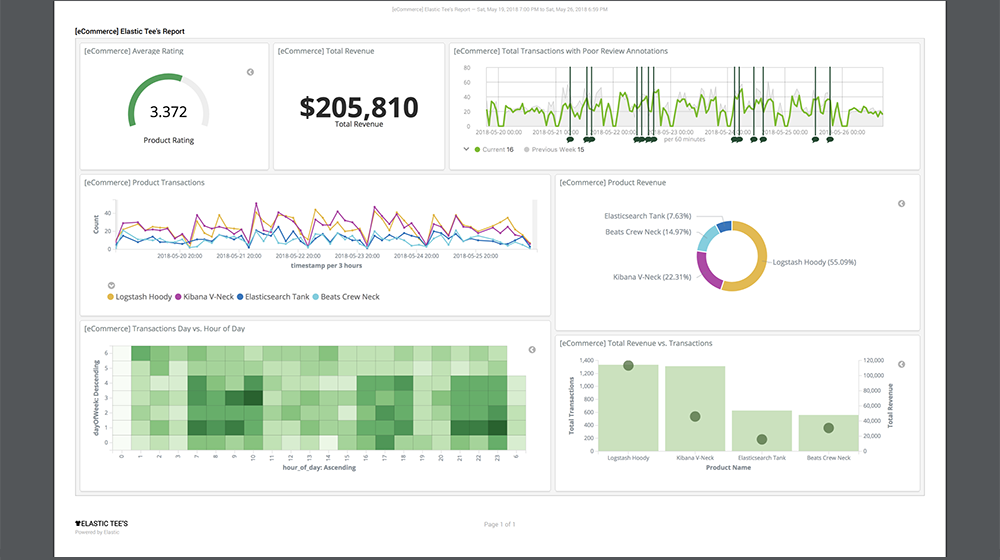

Here's another example in Kibana. You can pull the same data out and display it in Grafana.

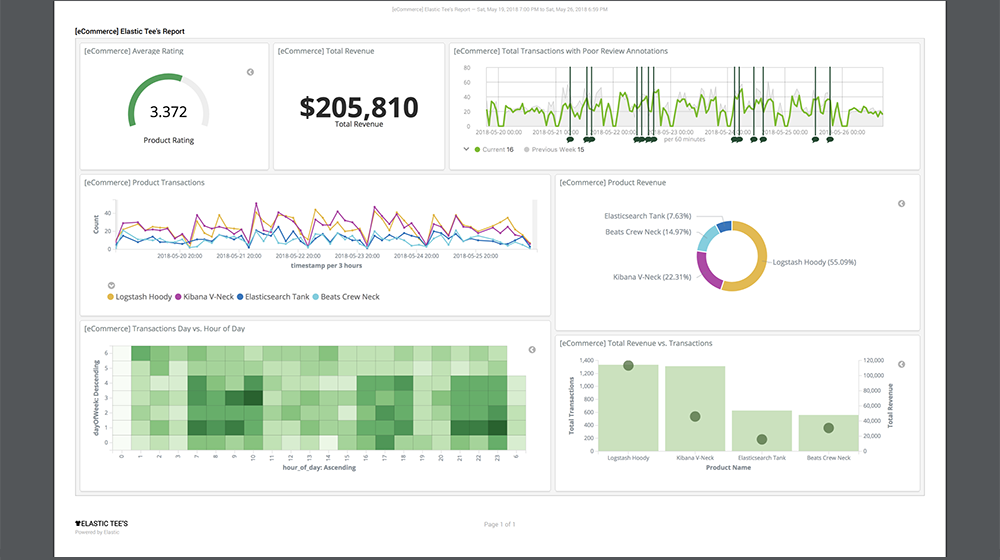

At the last place I worked, we had to allow only certain ciphers and MACs, show login banners, etc., but that was a specific case. We also limited SSH access so it could only come from the config management server, which kept people from logging into the VMs. The workstations could still be SSH'd into.
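As a rough illustration of that kind of cipher/MAC policy, here's a minimal Python sketch that scans sshd_config text for `Ciphers` and `MACs` entries outside an allowlist. The allowlist contents and the function name are hypothetical; use whatever your policy actually requires. It assumes plain comma-separated lists, so it doesn't understand OpenSSH modifier prefixes such as `+` and `-`, or `Match` blocks.

```python
# Hypothetical allowlists for illustration; substitute your own policy.
ALLOWED = {
    "ciphers": {"aes256-ctr", "aes192-ctr", "aes128-ctr"},
    "macs": {"hmac-sha2-512", "hmac-sha2-256"},
}


def audit_sshd_config(text: str) -> dict:
    """Return {keyword: [algorithms not on the allowlist]} for Ciphers/MACs lines."""
    problems = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)  # keyword, then the rest of the line
        keyword = parts[0].lower()   # sshd_config keywords are case-insensitive
        if keyword not in ALLOWED or len(parts) < 2:
            continue
        algos = [a.strip() for a in parts[1].split(",") if a.strip()]
        bad = [a for a in algos if a not in ALLOWED[keyword]]
        if bad:
            problems.setdefault(keyword, []).extend(bad)
    return problems
```

For example, feeding it `"Ciphers aes256-ctr,aes128-cbc\nMACs hmac-sha2-256\n"` flags only `aes128-cbc`.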

Also fail2ban isn't going to do anything with only key auth. The access gets denied before it has a chance to do anything.

I found this out the hard way when someone actually attempted to break in and I was essentially DoS'd out of remotely accessing my system. The load on the system spiked for around two hours.

@JaredBusch said in SSH Hardening:

@stacksofplates said in SSH Hardening:

Here was a topic I had posted a while back

Good post, but total overkill to me. What is the point of the connection from an IT point of view if not to manage the system? Yet your hardening restricts any administration except at the local console.

I log in to systems via SSH in order to reboot them, or update WTF ever application they are running. Tasks that your security will stop.

That was just a jump box, a way to get in to other stuff to do the admin. It is overkill for you; I just had ideas if you wanted them.

@JaredBusch said in SSH Hardening:

@stacksofplates said in SSH Hardening:

Also fail2ban isn't going to do anything with only key auth. The access gets denied before it has a chance to do anything.

Actually, it still catches it. I tested that.

At least on Fedora using systemd it does.

Ah, they must have changed it. SSH used to deny the request before it ever hit PAM, so fail2ban did nothing.
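Whether fail2ban reacts comes down to whether what sshd logs for the rejected attempt matches fail2ban's filter. Here's a simplified Python model of fail2ban's core loop, not its real code: match log lines against a failure regex, count hits per IP inside a sliding `findtime` window, and ban once `maxretry` is reached. The regex and the function name are illustrative assumptions, not the sshd filter fail2ban ships; the defaults are meant to mirror the usual stock values (5 retries in 10 minutes).

```python
import re
from collections import defaultdict, deque

# Illustrative failure pattern only; fail2ban's real sshd filter is far more thorough.
FAIL_RE = re.compile(
    r"(?:Failed \S+|Invalid user \S+) .*?from (?P<ip>\d{1,3}(?:\.\d{1,3}){3})"
)


def find_bans(events, maxretry=5, findtime=600):
    """events: iterable of (timestamp_seconds, log_line), oldest first.

    Returns the set of IPs that hit maxretry failures within findtime seconds.
    """
    windows = defaultdict(deque)  # ip -> timestamps of recent failures
    banned = set()
    for ts, line in events:
        m = FAIL_RE.search(line)
        if not m:
            continue  # lines the filter doesn't match are never counted
        ip = m.group("ip")
        win = windows[ip]
        win.append(ts)
        while win and ts - win[0] > findtime:
            win.popleft()  # forget failures older than the window
        if len(win) >= maxretry:
            banned.add(ip)
    return banned
```

The key point for this thread is the `continue`: if sshd's message for a denied key doesn't match the filter, the attempt is invisible to fail2ban.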

So I finally read this trash. How is this goon a CISSP? The CA doesn't have access to the private key on your server. That's not how CAs work. So if someone "steals the CA's key," they can't just MITM your traffic with an existing key. It's amazing that this was even published...

@ingmarkoecher said in Why Let’s Encrypt is a really, really, really bad idea…:

@stacksofplates Yes, but it's also about preventing imposters - so you know that who you're talking to is who they claim they are.

That's not what the certs are for. If I buy www.ebays.co and make my site look exactly like eBay, the cert doesn't have a responsibility to ensure I'm at the real eBay site. The only thing the cert is for is to ensure my data is encrypted between my end and the remote end and that someone can't intercept it. That's the cert's only purpose.

@G-I-Jones said in Spiceworks Custom CSS HelpDesk Theme:

Anyone here using Spiceworks played with the CSSpice plugin?

Has anyone figured out a way to change icons?

It might be a little tedious for all of the icons, but if you want to change a main logo or something, you can just base64-encode an image and use that in your CSS.

I took a picture of the Rancher logo and did that to show you:

Just do:

```css
some-item {
  background: url("data:image/png;base64,<base64 encoded string>");
}
```

Don't use image/png if it's not a PNG; use the correct format.
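A small Python helper, as a sketch, that does the encoding step and picks the MIME type from the file extension with the standard `mimetypes` module, so a JPEG gets `image/jpeg` rather than `image/png`. The function name and the selector you pass in are hypothetical.

```python
import base64
import mimetypes
from pathlib import Path


def css_background_rule(selector: str, image_path: str) -> str:
    """Build a CSS rule that inlines an image file as a base64 data URI."""
    mime, _ = mimetypes.guess_type(image_path)  # e.g. "image/png", "image/jpeg"
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"can't determine an image MIME type for {image_path}")
    encoded = base64.b64encode(Path(image_path).read_bytes()).decode("ascii")
    return f'{selector} {{\n  background: url("data:{mime};base64,{encoded}");\n}}'
```

Calling `print(css_background_rule(".logo", "rancher.png"))` would print a rule you can paste into the custom CSS.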

@DustinB3403 said in Using Ansible to Manage install and update Apple OSX DHCP clients:

@stacksofplates So on your ansible server do you have a folder called playbooks and in that you have numerous different <something>.yml files each that do something?

It's up to personal preference. I store things in ~/Documents/projects/ansible. In that I have a playbooks directory and a roles directory. The playbooks directory holds the playbooks I need and is a single git repo, and each role has its own git repo under roles.

The default ansible.cfg is /etc/ansible/ansible.cfg. It points you to /etc/ansible/hosts and /etc/ansible/roles, which I never use. I always put an ansible.cfg in my playbooks directory instead. When you run Ansible from that directory, that file takes precedence over the global one, so everything lives in the playbooks directory.
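A minimal Python sketch of the lookup order, following Ansible's documented precedence as I understand it: the `ANSIBLE_CONFIG` environment variable, then `ansible.cfg` in the current directory, then `~/.ansible.cfg`, then `/etc/ansible/ansible.cfg`. Only the first file found is used; settings aren't merged across files. The function is hypothetical and skips edge cases (for example, Ansible ignores an ansible.cfg in a world-writable current directory).

```python
import os
from pathlib import Path


def find_ansible_cfg(cwd=None, env=None, home=None):
    """Return the config file Ansible would load, or None if there is none.

    Only the first match is used; later candidates are ignored entirely.
    """
    env = os.environ if env is None else env
    cwd = Path.cwd() if cwd is None else Path(cwd)
    home = Path.home() if home is None else Path(home)
    candidates = []
    if env.get("ANSIBLE_CONFIG"):
        candidates.append(Path(env["ANSIBLE_CONFIG"]))
    candidates += [
        cwd / "ansible.cfg",                # e.g. your playbooks directory
        home / ".ansible.cfg",
        Path("/etc/ansible/ansible.cfg"),   # global default
    ]
    for path in candidates:
        if path.is_file():
            return path
    return None
```

This is why the per-project ansible.cfg only kicks in when you run `ansible-playbook` from inside the playbooks directory.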

@Obsolesce said in Using Ansible to Manage install and update Apple OSX DHCP clients:

So you are going to have SSH open on everything while allowing root and/or password login?

TF?

Maybe tone it down a tad, since you apparently don't understand what's happening. First, we are recommending keys for authentication, using the password only to set that up. Second, where did allowing root come from? That never came up. Third, I know you're on the "Salt is the savior of everything" train, but SSH is just as secure as ZeroMQ. If you limit where SSH access can come from to a subnet (like @IRJ mentioned) or to a single machine, it's pretty much exactly what you have with ZeroMQ, just without the message bus.
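A small Python sketch of the "only allow SSH from a subnet or a single machine" check, using the standard `ipaddress` module. In practice you'd enforce this with a firewall rule or sshd's `Match Address`/`AllowUsers`; the function name and the example networks below are hypothetical.

```python
import ipaddress
from typing import Iterable


def ssh_source_allowed(source_ip: str, allowed: Iterable[str]) -> bool:
    """True if source_ip is inside any allowed network; a bare IP acts as a /32 (or /128)."""
    addr = ipaddress.ip_address(source_ip)
    # strict=False tolerates host bits being set, e.g. "10.20.0.1/24"
    return any(addr in ipaddress.ip_network(net, strict=False) for net in allowed)
```

With `allowed = ["10.20.0.0/24", "10.0.0.5"]` (a management subnet plus one config management server), `10.20.0.17` is accepted and `192.168.1.9` is not.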

Plus this is ignoring the fact that when you get to fully immutable infrastructure (I realize the Macs aren't that) you can leverage Ansible through tools like Packer to build your image and never need SSH after the fact because you don't ever log in again at all.

I don't see a ton of uses for it with a VPS. I usually create a template with something like Packer and Ansible to do the hardening and then just clone from that template, but that's using their base image. On providers like GCP you can determine what keys go with what users without creating templates. I still use templates though that I build with Packer and Ansible.

I back up with Borg to a Linux server. I was using UrBackup, but Borg is so simple. I'll probably end up switching to Restic because its refactored code is supposedly faster than Borg now (it used to be the other way around). I also have a bias toward things written in Go 😛

That server then backs up to CrashPlan.

@travisdh1 said in Scripting partioning on AWS:

@IRJ said in Scripting partioning on AWS:

For anyone interested here are the CIS requirements.

https://secscan.acron.pl/ubuntu1604/1/1/2

https://secscan.acron.pl/ubuntu1604/1/1/6

https://secscan.acron.pl/ubuntu1604/1/1/7

https://secscan.acron.pl/ubuntu1604/1/1/12

Note: we have Ubuntu 18.04, but these requirements are the same.

Out of the 4 you linked to here, only adding nodev to /tmp even makes sense.

Resizing filesystems is a common activity in cloud-hosted servers. Separate filesystem partitions may prevent successful resizing, or may require the installation of additional tools solely for the purpose of resizing operations. The use of these additional tools may introduce their own security considerations.

I know, DoD, can't question the system, yadda, yadda. Still doesn't mean I don't call out stupid when I see it.

I can see value in a separate /var/log: you don't want runaway logs to fill up /. Separating /var and /var/log is much less useful. I can see /home too if it's an interactive system; if not, it's not that useful. I also think setting nosuid on /tmp is valuable, to help ensure something can't be elevated past normal privileges.
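To check the options discussed here (nodev, nosuid) on a running box, here's a minimal Python sketch that reads text in the /proc/mounts layout (device, mountpoint, fstype, options, dump, pass). The function name is hypothetical, and it doesn't decode escaped characters such as `\040` in mount paths.

```python
from typing import Iterable, Optional, Set


def missing_mount_options(mounts_text: str, mountpoint: str,
                          required: Iterable[str]) -> Optional[Set[str]]:
    """Return required options missing on mountpoint, or None if it isn't a separate mount."""
    options = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[1] == mountpoint:
            options = set(fields[3].split(","))  # a later entry (overmount) wins
    if options is None:
        return None  # e.g. /tmp lives on the root filesystem
    return set(required) - options
```

On a real system you'd pass it the contents of /proc/mounts, e.g. `missing_mount_options(open("/proc/mounts").read(), "/tmp", {"nodev", "nosuid"})`.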

Do they also have you remove LVM and all the tooling for LVM?

We didn't have to for DISA STIGs, that's how we created the volumes.

@matteo-nunziati said in how do you deal with SPOF with HAproxy:

@stacksofplates said in how do you deal with SPOF with HAproxy:

Keepalived is most likely what you're looking for. You assign a VIP to your interface and it keeps a heartbeat between the systems. The VIP (floating IP) will move between systems if there is an issue.

Once that's set up, use Serf to update your HAProxy configs or Consul for automatic service discovery which HAProxy can read.

If you're using a cloud provider, I'd just use one of their provided load balancers.
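Keepalived does this with VRRP. As a toy model of the failover decision only (not keepalived's code): each node advertises a priority, a node counts as dead once its heartbeats stop for longer than a timeout, and the highest-priority live node holds the VIP. The node names, priorities, and the 3-second timeout are made up for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Node:
    name: str
    priority: int          # higher priority wins, like VRRP's priority field
    last_heartbeat: float  # time (seconds) we last heard from this node


def vip_holder(nodes: Iterable[Node], now: float, dead_after: float = 3.0) -> Optional[str]:
    """Return the name of the node that should hold the VIP, or None if all are down."""
    alive = [n for n in nodes if now - n.last_heartbeat <= dead_after]
    if not alive:
        return None
    # Ties are broken by name here; real VRRP breaks ties on the higher IP address.
    return max(alive, key=lambda n: (n.priority, n.name)).name
```

If the primary (`lb1`) stops sending heartbeats, the next call picks the backup, which is the moment keepalived would move the floating IP.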

+1 for Serf, but I cannot find any docs about Serf as a distributed config tool (like Consul).

I only know Serf as a discovery/liveness tool...

When systems send events to the cluster, each member can run a script depending on the event. Their example repository has simple Bash scripts to update an HAProxy config.

https://github.com/hashicorp/serf/blob/master/demo/web-load-balancer/README.md

It's very very simple, but sometimes that's easier than setting up a full Consul cluster.
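A Python take on the same idea as the Bash scripts in that demo, as a sketch. It assumes Serf runs the handler with the event type in the `SERF_EVENT` environment variable and passes one member per line on stdin as tab-separated name, address, role, and tags; check that against the Serf docs for your version. The `web` role, port 80, and the function name are hypothetical, and actually rewriting haproxy.cfg and reloading HAProxy is left out.

```python
import os
import sys


def haproxy_server_lines(member_data: str, role: str = "web", port: int = 80) -> list:
    """Turn Serf member lines into HAProxy 'server' lines for one role."""
    lines = []
    for row in member_data.splitlines():
        fields = row.split("\t")
        if len(fields) < 3:
            continue  # skip blank or malformed rows
        name, address, member_role = fields[0], fields[1], fields[2]
        if member_role == role:
            lines.append(f"    server {name} {address}:{port} check")
    return lines


if __name__ == "__main__":
    # Only joins are handled here; a real handler would also drop failed/left members.
    if os.environ.get("SERF_EVENT") == "member-join":
        print("\n".join(haproxy_server_lines(sys.stdin.read())))
```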

@scottalanmiller said in MSP Helpdesk Options:

@flaxking said in MSP Helpdesk Options:

Zammad

Anyone tried this? Looking at the screen shots and it looks useless. LOL I can't tell where you ever see ticket lists or details. They show a lot of things that don't matter, but never show actually using the system.

Yeah I spun it up one time. It's not bad. Just spin up a container to test. That's the quickest way to look at it.

What does terraform state list show?

They should be in journalctl, right?

So I don't have a ton of experience with the AWS side, but with GCP you define your startup scripts like this:

Here's what's in my script:

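```bash
#!/bin/bash

sudo touch /etc/the_file

# sudo doesn't apply to a >> redirect (the calling shell opens the file), so append with tee -a
echo "is this working?" | sudo tee -a /etc/the_file > /dev/null

# Deliberately read a missing file so an error shows up in the logs
cat /etc/this-file-doesnt-exist
```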

Then after boot, you can run journalctl --boot and it gives you the logs from the current boot.

@Pete-S said in SSH tunneling/gateway question:

OK, this is what I ended up doing.

I wanted the remote server to have access to a local repository served over HTTP. This works with any kind of TCP traffic, though, since it's TCP forwarding rather than a web proxy.

I set up the files and served the website on the SSH client machine with PHP's built-in server. It's easy to use and requires no setup: you just start it in the base directory you want to serve. I used port 8000.

```shell
php -S localhost:8000
```

Then access the remote server from the client with reverse TCP forwarding active. This forwards port 8000 on the remote host to port 8000 on the local SSH client:

```shell
ssh -R 8000:localhost:8000 remote_ip
```

But since I was connecting from a Windows machine, I used PuTTY instead.

This is how you set up the tcp forwarding:

It seems you can forward not just one port but many, in whichever direction you want, at the same time.

To check that things are working:

```shell
wget localhost:8000
```

In my case I wanted the apt package manager to use the forwarded port, so I just changed it to use http://localhost:8000 to access the packages.
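To make the "TCP forwarding, not a web proxy" point concrete, here's a minimal asyncio sketch of a plain TCP relay: it listens on one port and copies raw bytes to and from a target. That's roughly what SSH does for each -L/-R forward, minus the encryption and the trip through the SSH connection. The function names are hypothetical; it's an illustration, not a replacement for ssh -R.

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then half-close that side."""
    while data := await reader.read(65536):
        writer.write(data)
        await writer.drain()
    if writer.can_write_eof():
        writer.write_eof()


async def start_forwarder(listen_port: int, target_host: str, target_port: int,
                          listen_host: str = "127.0.0.1") -> asyncio.AbstractServer:
    """Accept TCP connections on listen_port and relay each one to the target."""

    async def handle(client_reader, client_writer):
        remote_reader, remote_writer = await asyncio.open_connection(target_host, target_port)
        try:
            # Relay both directions at once; the payload is opaque bytes,
            # which is why HTTP, apt, or anything else over TCP works.
            await asyncio.gather(pipe(client_reader, remote_writer),
                                 pipe(remote_reader, client_writer))
        finally:
            for w in (client_writer, remote_writer):
                w.close()

    return await asyncio.start_server(handle, listen_host, listen_port)
```

Inside a coroutine, `server = await start_forwarder(8000, "127.0.0.1", 8080)` followed by `await server.serve_forever()` would relay local port 8000 to a service on port 8080.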

Right, you can name any number of ports. If you want dynamic tunneling, you can pass -D and use the remote host as a SOCKS proxy. Then you only define the one proxy port in your browser or wherever.

SSH is pretty awesome.
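For the -D case, ssh listens on a local port and speaks SOCKS (versions 4 and 5) to whatever connects. As a sketch of what a browser sends to that port, this builds the SOCKS5 client greeting and a CONNECT request for a hostname as laid out in RFC 1928. The function names are hypothetical, and example.com/443 in the test are sample values.

```python
import struct


def socks5_greeting() -> bytes:
    """Version 5, one auth method offered: 0x00 (no authentication)."""
    return bytes([0x05, 0x01, 0x00])


def socks5_connect_request(host: str, port: int) -> bytes:
    """CONNECT to host:port, sending the hostname (ATYP 0x03) so the proxy side resolves it."""
    name = host.encode("idna")
    if not 0 < len(name) <= 255:
        raise ValueError("hostname must be 1 to 255 bytes")
    # VER=5, CMD=1 (CONNECT), RSV=0, ATYP=3 (domain name), then the length-prefixed name
    header = bytes([0x05, 0x01, 0x00, 0x03, len(name)])
    return header + name + struct.pack("!H", port)  # port in network byte order
```

The client sends the greeting first, waits for the proxy to reply with the method it picked, and only then sends the CONNECT request; after a success reply, the socket carries the tunneled traffic.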

@Dashrender said in Managing spam posting:

@stacksofplates said in Managing spam posting:

Jeff Geerling wrote an awesome module for Drupal called Honeypot. It adds a field to the registration page, and when that field is filled out the system ignores the registration. The field is hidden with CSS, so normal people don't see it, but bots fill it out because it's a field. Not sure if NodeBB has anything like that, though.

Sounds like something that could be easily detected (and avoided) by bots.

It works really well. You can name the field whatever you want, so it's not a default name shared by every site. It also throws out a registration if it's submitted faster than the time limit you define; I think the default is 5 seconds. Together these methods catch a ton of bots in my experience.
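A minimal Python sketch of those two checks, not the Drupal module's actual code. The field name and the 5-second limit are hypothetical placeholders, and it assumes the time the form was rendered is tracked somewhere a bot can't tamper with, such as the server-side session.

```python
# Hypothetical names; pick a plausible, site-specific field name.
HONEYPOT_FIELD = "homepage_url"
TIME_LIMIT_SECONDS = 5  # minimum plausible time for a human to fill in the form


def looks_like_bot(form: dict, rendered_at: float, submitted_at: float) -> bool:
    """Flag a registration as bot traffic using the hidden-field and timing checks."""
    if form.get(HONEYPOT_FIELD):
        # The field is hidden with CSS, so a human leaves it empty.
        return True
    if submitted_at - rendered_at < TIME_LIMIT_SECONDS:
        # Submitted faster than a person could plausibly fill in the form.
        return True
    return False
```

Neither check is strong alone, but together they cost a real user nothing while tripping up most form-filling scripts.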