ProxMox Storage Configuration Question (idk how lol)

-

@travisdh1 said in ProxMox Storage Configuration Question (idk how lol):

They've completely bought into the cult of ZFS and the Windows world of "software RAID is bad".

It's weird because they push software RAID, just not the good software RAID that's baked in. Only software RAID from a system that is neither native nor stable on Linux. Ugh.

-

@GUIn00b said in ProxMox Storage Configuration Question (idk how lol):

Any advice is appreciated.

what is the point of this host?

how important is your data?

why use software raid?

who will support it if you are not there?

do they actually understand anything?

I use hardware raid 100% in client systems. Why? Because bespoke is bad. Software raid is bespoke. No matter how old and well documented it is, it is something the majority never deals with. MDADM or ZFS or whatever, it matters not.

I don't use it (hardware raid) because it is better, I use it because it is more easily understood and supported by the majority.

-

@JaredBusch said in ProxMox Storage Configuration Question (idk how lol):

Software raid is bespoke.

That's true in the case of ProxMox with ZFS, because ZFS is neither native nor stable on that platform, nor is there any team that makes it work. It's a product from one world shoehorned into another (possibly in license violation), and there is no official support, testing, or anything else from any vendor or team.

But MD is way, way the opposite. MD is as enterprise and anti-bespoke as it gets. It's part of the base OS, it is the most reliable RAID system out there.

Technically, using a third-party external hardware replacement for the OS's own tooling is far more bespoke than using MD internally.

-

@JaredBusch said in ProxMox Storage Configuration Question (idk how lol):

I don't use it (hardware raid) because it is better, I use it because it is more easily understood and supported by the majority.

When non-IT people need to interact, it's better. That's why we use it. We don't want customers thinking that they can do stuff without IT and causing damage. It means we can send a middle schooler in to change drives without needing to coordinate on the timing. It means some random drive delivery guy can do the drive swap without asking.

It costs a lot more. It lowers performance. But for customers who don't have someone technical making sure random people aren't touching servers when they aren't supposed to, the value of blind swap is a big deal.

However, all those non-technical people are still hitting the power button, pulling cables, spilling coffee... so I don't know if it has ever protected us, lol.

-

@JaredBusch said in ProxMox Storage Configuration Question (idk how lol):

I use it because it is more easily understood and supported by the majority.

In general, I actually think this is a negative. Making systems that are "easy for people who don't know what they are doing to pretend that they do" is one of the biggest causes of problems I find in customer systems.

"Oh, I thought I could just change how these things work and..." now they have no backups, now they are offline, now their data is corrupt, etc. etc.

The appearance of being accessible without knowledge encourages the Jurassic Park Effect.

-

@scottalanmiller said in ProxMox Storage Configuration Question (idk how lol):

It means some random drive delivery guy can do the drive swap without asking.

It means the "dell" or "hp" tech that is just a random contractor can swap the drive under warranty support without needing us to deal with anything more than checking that the auto-rebuild started in the controller.

-

@scottalanmiller said in ProxMox Storage Configuration Question (idk how lol):

In general, I actually think this is a negative. Making systems that are "easy for people who don't know what they are doing to pretend that they do" is one of the biggest causes of problems I find in customer systems.

No one knows how to use MD or ZFS. Instead they go to Google-sensei.

-

@JaredBusch said in ProxMox Storage Configuration Question (idk how lol):

@scottalanmiller said in ProxMox Storage Configuration Question (idk how lol):

In general, I actually think this is a negative. Making systems that are "easy for people who don't know what they are doing to pretend that they do" is one of the biggest causes of problems I find in customer systems.

No one knows how to use MD or ZFS. Instead they go to Google-sensei.

End users, sure. Salespeople, sure. But if you have an IT team, you are good to go. Having to pay hundreds of dollars for lower-reliability, lower-performance solutions so that shops without IT can pretend to keep themselves safe is a penalty for people who don't want skilled labor. But overall, just hiring an IT team or having a qualified IT department makes far more sense. You get more protection, and it often actually costs less. There's no shortage of IT people.

I'm a huge believer in doing a good job, and if the customer screws up by not caring, that's on them. But intentionally doing a bad job because I assume the customer is an idiot makes it my fault.

-

@JaredBusch said in ProxMox Storage Configuration Question (idk how lol):

@scottalanmiller said in ProxMox Storage Configuration Question (idk how lol):

It means some random drive delivery guy can do the drive swap without asking.

It means the "dell" or "hp" tech that is just a random contractor can swap the drive under warranty support without needing us to deal with anything more than checking that the auto-rebuild started in the controller.

Right. Not something I recommend doing, ever. Because those are the same people who pull the wrong drive, or the right drive from the wrong server. It's a great idea, and that's why we normally do it for really small shops. But it carries a lot of dangers of its own because it encourages people to make big hardware changes without asking.

Seen a LOT of data loss caused by making this seem like a good idea.

-

@JaredBusch said in ProxMox Storage Configuration Question (idk how lol):

@GUIn00b said in ProxMox Storage Configuration Question (idk how lol):

Any advice is appreciated.

what is the point of this host?

how important is your data?

why use software raid?

who will support it if you are not there?

do they actually understand anything?

I use hardware raid 100% in client systems. Why? Because bespoke is bad. Software raid is bespoke. No matter how old and well documented it is, it is something the majority never deals with. MDADM or ZFS or whatever, it matters not.

I don't use it (hardware raid) because it is better, I use it because it is more easily understood and supported by the majority.

-

It's my home hypervisor for hosting my home things but it's also serving as a learning tool as I'm a veteran of M$ systems management/consulting and wish to build a comparable mastery skillset in all things Linux.

-

It's important enough I don't want to have to rebuild this over and over. I like the idea of LVM offering snapshotting (similar to M$ Shadow Copy) so I have a little fail-safe there. Also using RAID serves as fortified storage. I do plan to implement backups once I have some things on here built that are worth backing up. Not only will this be a hypervisor I can spin up various things for tinkering/learning, but it will host my personal media and documents as well as provide various services to my house.

-

Because technology has reached a point where hardware RAID largely isn't as necessary at these lower levels as it used to be. It's also going to be a working model of advanced storage configuration for me to build my home infrastructure on, and the experience again counts toward building that Linux skillset.

-

I presume this is from a "you're no longer around" premise. Being my personal home system, if I'm "no longer around" (hit by a bus? lol), I'm not really too concerned about it.

-

No, I don't.

-

My apologies, and thanks to all for the feedback. Life decided to drive a Mack truck with no brakes through my world this past week or so, so I've been MIA.

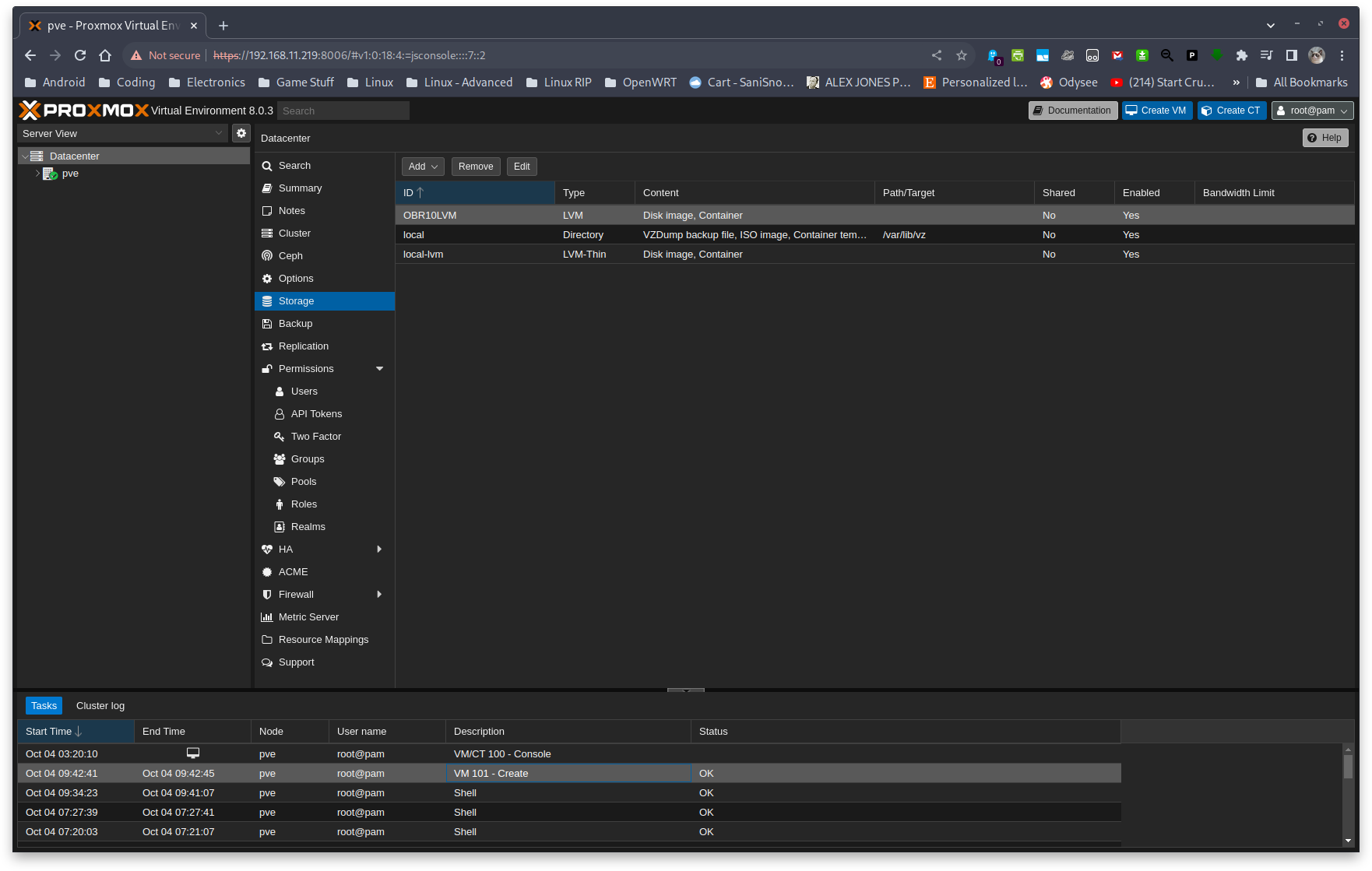

I went ahead and blew away that mdadm setup and used LVM2 (I think LVM2?) to create the RAID. Here's the console output:

    root@pve:/# lvcreate --type raid10 -l 100%FREE --stripesize 2048k --name LVOBR10 OBR10
      Logical volume "LVOBR10" created.
    root@pve:/# lvs
      LV      VG    Attr          LSize Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
      LVOBR10 OBR10 rwi-a-r---   <7.28t                                        0.00
      data    pve   twi-a-tz-- <141.23g               0.00   1.13
      root    pve   -wi-ao----  <69.37g
      swap    pve   -wi-ao----    8.00g
    root@pve:/#
    root@pve:~# lsblk
    NAME                       MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
    sda                          8:0    0   3.6T  0 disk
    ├─OBR10-LVOBR10_rmeta_0    253:5    0     4M  0 lvm
    │ └─OBR10-LVOBR10          253:13   0   7.3T  0 lvm
    └─OBR10-LVOBR10_rimage_0   253:6    0   3.6T  0 lvm
      └─OBR10-LVOBR10          253:13   0   7.3T  0 lvm
    sdb                          8:16   0   3.6T  0 disk
    ├─OBR10-LVOBR10_rmeta_1    253:7    0     4M  0 lvm
    │ └─OBR10-LVOBR10          253:13   0   7.3T  0 lvm
    └─OBR10-LVOBR10_rimage_1   253:8    0   3.6T  0 lvm
      └─OBR10-LVOBR10          253:13   0   7.3T  0 lvm
    sdc                          8:32   0   3.6T  0 disk
    ├─OBR10-LVOBR10_rmeta_2    253:9    0     4M  0 lvm
    │ └─OBR10-LVOBR10          253:13   0   7.3T  0 lvm
    └─OBR10-LVOBR10_rimage_2   253:10   0   3.6T  0 lvm
      └─OBR10-LVOBR10          253:13   0   7.3T  0 lvm
    sdd                          8:48   0   3.6T  0 disk
    ├─OBR10-LVOBR10_rmeta_3    253:11   0     4M  0 lvm
    │ └─OBR10-LVOBR10          253:13   0   7.3T  0 lvm
    └─OBR10-LVOBR10_rimage_3   253:12   0   3.6T  0 lvm
      └─OBR10-LVOBR10          253:13   0   7.3T  0 lvm
    nvme0n1                    259:0    0 238.5G  0 disk
    ├─nvme0n1p1                259:1    0  1007K  0 part
    ├─nvme0n1p2                259:2    0     1G  0 part /boot/efi
    └─nvme0n1p3                259:3    0 237.5G  0 part
      ├─pve-swap               253:0    0     8G  0 lvm  [SWAP]
      ├─pve-root               253:1    0  69.4G  0 lvm  /
      ├─pve-data_tmeta         253:2    0   1.4G  0 lvm
      │ └─pve-data             253:4    0 141.2G  0 lvm
      └─pve-data_tdata         253:3    0 141.2G  0 lvm
        └─pve-data             253:4    0 141.2G  0 lvm
    root@pve:~# df -h
    Filesystem            Size  Used Avail Use% Mounted on
    udev                   32G     0   32G   0% /dev
    tmpfs                 6.3G  1.9M  6.3G   1% /run
    /dev/mapper/pve-root   68G  2.9G   62G   5% /
    tmpfs                  32G   46M   32G   1% /dev/shm
    tmpfs                 5.0M     0  5.0M   0% /run/lock
    /dev/nvme0n1p2       1022M  344K 1022M   1% /boot/efi
    /dev/fuse             128M   16K  128M   1% /etc/pve
    tmpfs                 6.3G     0  6.3G   0% /run/user/0
    root@pve:~#

It's not LVM-thin, and I don't know whether it should be. From a "learn things one step at a time" perspective, this seems straightforward and I grasp it conceptually. I'll probably do the LVM-thin thing down the road. My only pending question about this config is whether I picked a good stripe size. The default is 64k, but I read somewhere that for this it should be 2048k. I have no idea lol, so I went with 2048k.

Next is to set up a Fedora server VM.
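For the stripe-size question and the `<7.28t` that `lvs` reports, here is a minimal Python sketch of the raid10 arithmetic. It rests on assumptions that are not in the thread: each drive is a typical "4 TB" disk of 4,000,787,030,016 bytes, LVM uses its default 4 MiB extents with the data area starting 1 MiB into each PV, and the LV is laid out as 2 stripes x 2 mirrors (which is consistent with the four `rimage` sub-LVs in `lsblk`). It does not say which stripe size is better for any workload; it only shows what `--stripesize` multiplies out to.

```python
# Sketch of 4-disk LVM raid10 arithmetic. ASSUMPTIONS (not from the thread):
# "4 TB" drives of 4,000,787,030,016 bytes, LVM's default 4 MiB extents,
# a 1 MiB data offset on each PV, and a 2-stripe x 2-mirror layout.
MiB = 1024**2
TiB = 1024**4
PE_BYTES = 4 * MiB                # assumed physical extent size
PE_START = 1 * MiB                # assumed offset of the data area on each PV
DRIVE_BYTES = 4_000_787_030_016   # assumed raw size of each drive


def extents_per_pv(drive_bytes: int) -> int:
    """Whole extents LVM can allocate from one physical volume."""
    return (drive_bytes - PE_START) // PE_BYTES


def raid10_full_size(drives: int, drive_bytes: int, mirrors: int = 2) -> int:
    """Bytes in a raid10 LV created with -l 100%FREE.

    Each drive carries one rimage sub-LV plus a one-extent rmeta sub-LV,
    and each stripe's data is copied onto `mirrors` drives, so only
    drives // mirrors images hold unique data.
    """
    image_extents = extents_per_pv(drive_bytes) - 1  # one extent for rmeta
    stripes = drives // mirrors
    return stripes * image_extents * PE_BYTES


def full_stripe_kib(drives: int, stripesize_kib: int, mirrors: int = 2) -> int:
    """Unique data written across all stripes before the next row starts."""
    return (drives // mirrors) * stripesize_kib


size = raid10_full_size(4, DRIVE_BYTES)
print(f"{size / TiB:.4f} TiB")          # 7.2774 TiB
print(full_stripe_kib(4, 2048), "KiB")  # 4096 KiB with --stripesize 2048k
print(full_stripe_kib(4, 64), "KiB")    # 128 KiB with the 64k default
```

Under those assumptions the 100%FREE LV comes out at about 7.2774 TiB, which `lvs` would display as `<7.28t`. The full stripe is how much unique data gets written across both stripes before the layout moves on to the next row.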

-

I'm missing something again. When I tried to create a VM and use that volume as the location for the VM's virtual disk, I got an error saying there was no free space. I'm going to put on some easy listening circus music while I Google and read some more for now!
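One likely reading of the "no free space" error, assuming the array was added to Proxmox as LVM storage backed by the `OBR10` volume group: that storage type creates each VM disk as a new LV inside the VG, and `-l 100%FREE` had already handed every extent in the VG to `LVOBR10`. A small Python sketch of that check follows; the 4 MiB extent size is LVM's default and an assumption here, and `can_create_lv` is a hypothetical helper, not a Proxmox or LVM API.

```python
# Sketch of why a fully allocated VG refuses a new LV. ASSUMPTION: LVM's
# default 4 MiB extent size. can_create_lv is a hypothetical helper, not a
# Proxmox or LVM API.
MiB = 1024**2
GiB = 1024**3
PE_BYTES = 4 * MiB


def extents_needed(size_bytes: int) -> int:
    """Round a requested LV size up to whole physical extents."""
    return -(-size_bytes // PE_BYTES)


def can_create_lv(vg_free_extents: int, size_bytes: int) -> bool:
    """A new LV fits only if the VG still has that many unallocated extents."""
    return extents_needed(size_bytes) <= vg_free_extents


vm_disk = 80 * GiB  # hypothetical request size

# After `lvcreate -l 100%FREE`, LVOBR10 owns every extent in OBR10.
print(can_create_lv(0, vm_disk))                      # False
# With roughly 568 GiB still free in the VG, the same request fits.
print(can_create_lv(568 * GiB // PE_BYTES, vm_disk))  # True
```

The 80 GiB figure is only there because the VM disk that shows up later is 80G; any size fails against zero free extents.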

-

I think I fixed it lol, but if anyone sees otherwise, please let me know. It's a new adventure for me!

    root@pve:~# lvcreate --type raid10 --size 7t --stripesize 2048k --name LVOBR10 OBR10
      Logical volume "LVOBR10" created.
    root@pve:~# lvs
      LV            VG    Attr          LSize Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
      LVOBR10       OBR10 rwi-a-r---    7.00t                                        0.00
      data          pve   twi-aotz-- <141.23g               1.60   1.18
      root          pve   -wi-ao----  <69.37g
      swap          pve   -wi-ao----    8.00g
      vm-100-disk-0 pve   Vwi-aotz--   80.00g data          2.82
    root@pve:~# vgs
      VG    #PV #LV #SN Attr     VSize   VFree
      OBR10   4   1   0 wz--n-  14.55t 568.06g
      pve     1   4   0 wz--n- 237.47g  16.00g
    root@pve:~# pvs
      PV             VG    Fmt  Attr    PSize    PFree
      /dev/nvme0n1p3 pve   lvm2 a--   237.47g   16.00g
      /dev/sda       OBR10 lvm2 a--    <3.64t <142.02g
      /dev/sdb       OBR10 lvm2 a--    <3.64t <142.02g
      /dev/sdc       OBR10 lvm2 a--    <3.64t <142.02g
      /dev/sdd       OBR10 lvm2 a--    <3.64t <142.02g
    root@pve:~# lsblk
    NAME                         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
    sda                            8:0    0   3.6T  0 disk
    ├─OBR10-LVOBR10_rmeta_0      253:5    0     4M  0 lvm
    │ └─OBR10-LVOBR10            253:13   0     7T  0 lvm
    └─OBR10-LVOBR10_rimage_0     253:6    0   3.5T  0 lvm
      └─OBR10-LVOBR10            253:13   0     7T  0 lvm
    sdb                            8:16   0   3.6T  0 disk
    ├─OBR10-LVOBR10_rmeta_1      253:7    0     4M  0 lvm
    │ └─OBR10-LVOBR10            253:13   0     7T  0 lvm
    └─OBR10-LVOBR10_rimage_1     253:8    0   3.5T  0 lvm
      └─OBR10-LVOBR10            253:13   0     7T  0 lvm
    sdc                            8:32   0   3.6T  0 disk
    ├─OBR10-LVOBR10_rmeta_2      253:9    0     4M  0 lvm
    │ └─OBR10-LVOBR10            253:13   0     7T  0 lvm
    └─OBR10-LVOBR10_rimage_2     253:10   0   3.5T  0 lvm
      └─OBR10-LVOBR10            253:13   0     7T  0 lvm
    sdd                            8:48   0   3.6T  0 disk
    ├─OBR10-LVOBR10_rmeta_3      253:11   0     4M  0 lvm
    │ └─OBR10-LVOBR10            253:13   0     7T  0 lvm
    └─OBR10-LVOBR10_rimage_3     253:12   0   3.5T  0 lvm
      └─OBR10-LVOBR10            253:13   0     7T  0 lvm
    nvme0n1                      259:0    0 238.5G  0 disk
    ├─nvme0n1p1                  259:1    0  1007K  0 part
    ├─nvme0n1p2                  259:2    0     1G  0 part /boot/efi
    └─nvme0n1p3                  259:3    0 237.5G  0 part
      ├─pve-swap                 253:0    0     8G  0 lvm  [SWAP]
      ├─pve-root                 253:1    0  69.4G  0 lvm  /
      ├─pve-data_tmeta           253:2    0   1.4G  0 lvm
      │ └─pve-data-tpool         253:4    0 141.2G  0 lvm
      │   ├─pve-data             253:14   0 141.2G  1 lvm
      │   └─pve-vm--100--disk--0 253:15   0    80G  0 lvm
      └─pve-data_tdata           253:3    0 141.2G  0 lvm
        └─pve-data-tpool         253:4    0 141.2G  0 lvm
          ├─pve-data             253:14   0 141.2G  1 lvm
          └─pve-vm--100--disk--0 253:15   0    80G  0 lvm
    root@pve:~# df -h
    Filesystem            Size  Used Avail Use% Mounted on
    udev                   32G     0   32G   0% /dev
    tmpfs                 6.3G  1.9M  6.3G   1% /run
    /dev/mapper/pve-root   68G  3.5G   61G   6% /
    tmpfs                  32G   55M   32G   1% /dev/shm
    tmpfs                 5.0M     0  5.0M   0% /run/lock
    /dev/nvme0n1p2       1022M  344K 1022M   1% /boot/efi
    /dev/fuse             128M   16K  128M   1% /etc/pve
    tmpfs                 6.3G     0  6.3G   0% /run/user/0
    root@pve:~#
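The `568.06g` of `VFree` in `vgs` (and the `<142.02g` per drive in `pvs`) is what remains once a 7 TiB raid10 LV has put two copies of its data across the four drives, plus one metadata extent per drive. A Python sketch of that accounting follows. The drive size, 4 MiB extents, 1 MiB data offset, and 2-stripe x 2-mirror layout are assumptions rather than facts from the thread, though together they land on the same figures `pvs` and `vgs` print.

```python
# Sketch reproducing the pvs/vgs free space after the 7t raid10 LV.
# ASSUMPTIONS (not from the thread): "4 TB" drives of 4,000,787,030,016
# bytes, 4 MiB extents, a 1 MiB data offset per PV, 2 stripes x 2 mirrors.
MiB = 1024**2
GiB = 1024**3
TiB = 1024**4
PE_BYTES = 4 * MiB
PE_START = 1 * MiB
DRIVE_BYTES = 4_000_787_030_016


def leftover_per_pv(lv_bytes: int, drives: int = 4, mirrors: int = 2) -> int:
    """Free extents left on each PV after a raid10 LV of lv_bytes."""
    pv_extents = (DRIVE_BYTES - PE_START) // PE_BYTES
    stripes = drives // mirrors
    # Each rimage holds 1/stripes of the LV, rounded up to whole extents.
    image_extents = -(-lv_bytes // (stripes * PE_BYTES))
    return pv_extents - image_extents - 1  # minus one extent for rmeta


per_pv = leftover_per_pv(7 * TiB)
print(f"PFree per PV: {per_pv * PE_BYTES / GiB:.4f} GiB")        # 142.0156
print(f"VFree for OBR10: {4 * per_pv * PE_BYTES / GiB:.4f} GiB")  # 568.0625
```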

-

@GUIn00b Seems right. I'm old school and learned MD before the interface was added to LVM. It's all the same stuff, just new command line options. But it sure looks right to me.